the four painters from takuya on Vimeo.

Abstract

Deep Learning is a recently emerged Machine Learning technique that achieves high accuracy in image recognition.

With this technology, a computer can now learn an artist’s painting style and imitate it.

Because a painting is a scene seen through the painter’s eyes, this means we may be able to see the ‘scenes’ the painters themselves saw.

This video tries to reproduce how four famous artists might have seen their daily lives by imitating their painting styles.

The computer can now convert a photo into an image in an artist’s style

There are a number of tools that let anyone use Machine Learning technology in daily life, even people who are not experts in Neural Networks, Deep Learning, etc.

Deep Learning is very accurate at image recognition, so I recently made an iOS app called Ramelier that recommends ramen shops from photos of ramen.

This post introduces a video I created with Deep Learning.

This technology can imitate the painting style of an artist’s work: New Neural Algorithm Can ‘Paint’ Photos In Style Of Any Artist From Van Gogh To Picasso.
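The algorithm in that article (Gatys et al., "A Neural Algorithm of Artistic Style", which neural-style implements) describes an image's style through the Gram matrices of a convolutional network's feature maps: which features appear together, regardless of where they appear. The minimal sketch below shows only that style representation and a squared-error style loss in NumPy. It is not neural-style's code: random arrays stand in for real network activations (neural-style uses a VGG network), and normalizing by the number of spatial positions is an assumption chosen for illustration.

```python
import numpy as np


def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Gram matrix of a (channels, height, width) feature map.

    Entry (i, j) is the inner product of channels i and j summed over all
    spatial positions: it records which features occur together and
    discards where in the image they occur.
    """
    c, h, w = features.shape
    flat = features.reshape(c, h * w)  # one row per channel
    return flat @ flat.T / (h * w)     # normalize by the number of positions


def style_loss(generated: np.ndarray, style: np.ndarray) -> float:
    """Mean squared difference between the Gram matrices of two feature maps."""
    diff = gram_matrix(generated) - gram_matrix(style)
    return float(np.mean(diff ** 2))


# Random arrays stand in for real CNN activations.
rng = np.random.default_rng(0)
feats = rng.standard_normal((8, 16, 16))

# Move every spatial position somewhere else (same permutation for all channels).
perm = rng.permutation(16 * 16)
shuffled = feats.reshape(8, -1)[:, perm].reshape(8, 16, 16)

print(style_loss(feats, shuffled))  # effectively zero: same "style", different layout
```

Shuffling pixel positions leaves the Gram matrix unchanged, which is why this loss captures texture and brushwork rather than the layout of the scene; the layout is kept by a separate content loss that this sketch leaves out.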

That means the computer can now grasp an artist’s sensibility!

Fortunately, people have already implemented this algorithm in easy-to-use form for frameworks such as Chainer and Torch.

This time I chose neural-style, the Torch implementation.

Imitating my own painting style

I also like drawing, so I tried imitating my own painting style, shown below:

This style seemed hard to learn, since the work is painted with neither oil nor watercolor.

As the picture to convert, I chose the photo below, a view in Europe:

Here is the result, and it works well!

Can we see the ‘scenes’ the painters saw?

I’m not a famous painter, but as a painter myself, I can say that a work is a scene seen through the painter’s eyes.

According to brain science, humans don’t perceive an image exactly as it is; they summarize it to reduce the amount of information.

Through this summarization, unnecessary details are removed and the important points in the image are emphasized.

In other words, the scene a person sees may be a view emphasized by his or her past and present feelings.

If that is true, could we think of painting style as part of the artist’s summarization process?

If so, by creating videos that imitate their painting styles, we may be able to reproduce, at least a little, how they saw their daily lives.

the four painters

This video consists of four short clips, each generated in the style of a famous artist.

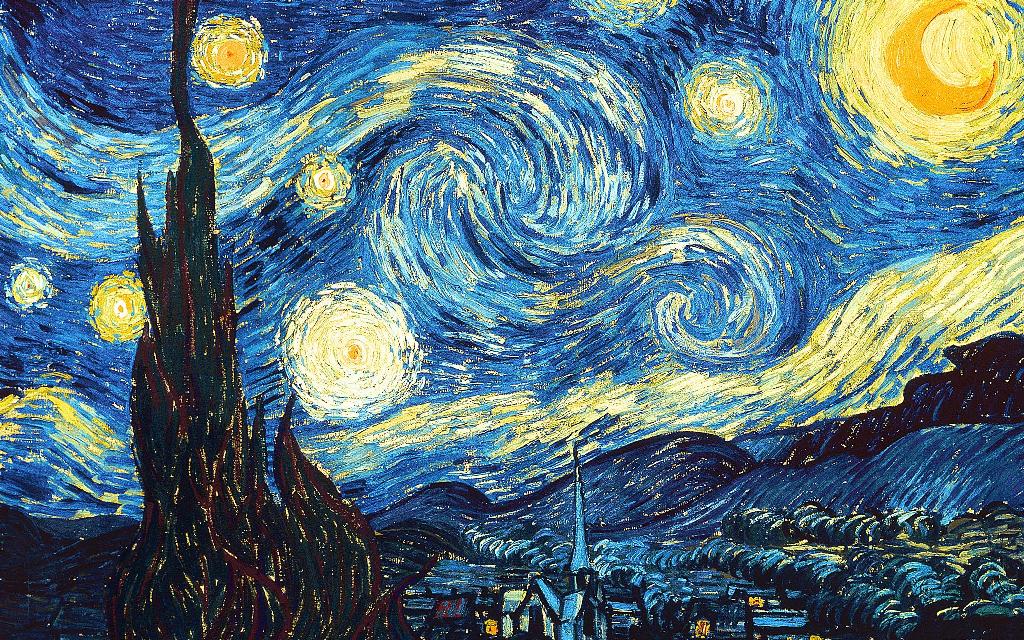

Vincent van Gogh: Jellyfish

Starry Night:

Jellyfish:

Result:

Edvard Munch: Sunlight

The Scream:

Sunlight:

Result:

Kiyoshi Yamashita: Rose

Fireworks in Nagaoka:

Rose:

Result:

This is breathtaking, like a jeweled flower!

Katsushika Hokusai: Wave

Kabarinagashi:

Wave:

Result:

It took 1 month on my iMac

I used my home iMac (Late 2013), and it was tough going.

Fortunately, this iMac has an NVIDIA graphics card, so I could use CUDA.

Split image into 4 parts

Being a home machine, my iMac has only 2GB of GPU memory.

That is not enough to output large images.

Setting the display resolution down to 640 × 480 px let it output slightly larger results, presumably because the display then uses less GPU memory.

Even so, I still had to split each frame into 4 parts to produce high-quality 720p video.

This had a side effect: each frame shows a visible border where the parts are joined.
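A minimal sketch of the splitting step, assuming a 1280 × 720 (720p) frame stored as a NumPy array and a 2 × 2 grid. The helper names `split_2x2` and `stitch_2x2` are hypothetical, and the stylization of each tile is not shown. Splitting and stitching are lossless on their own, so the borders come from stylizing each quadrant independently: neighboring tiles are optimized without seeing each other's edges.

```python
from __future__ import annotations

import numpy as np


def split_2x2(frame: np.ndarray) -> list[np.ndarray]:
    """Split an (H, W, C) frame into four quadrants: TL, TR, BL, BR."""
    h, w = frame.shape[:2]
    hh, hw = h // 2, w // 2
    return [frame[:hh, :hw], frame[:hh, hw:],
            frame[hh:, :hw], frame[hh:, hw:]]


def stitch_2x2(tiles: list[np.ndarray]) -> np.ndarray:
    """Reassemble the four quadrants produced by split_2x2."""
    top = np.concatenate(tiles[0:2], axis=1)
    bottom = np.concatenate(tiles[2:4], axis=1)
    return np.concatenate([top, bottom], axis=0)


# One random 720p RGB frame (height 720, width 1280).
rng = np.random.default_rng(0)
frame = rng.integers(0, 256, size=(720, 1280, 3), dtype=np.uint8)

tiles = split_2x2(frame)  # each tile is (360, 640, 3)
# ... each tile would be stylized separately here (not shown) ...
restored = stitch_2x2(tiles)
print([t.shape for t in tiles])
```

Each 360 × 640 tile has a quarter of the pixels of a full frame, so it needs roughly a quarter of the activation memory, which is what makes it fit in 2GB of GPU memory.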

200-400 iterations

Good results need about 1000 iterations, but processing time grows with the number of iterations.

Each video has 300 frames, which becomes 1200 images after splitting each frame into 4 parts.

Running 1000 iterations on every image would take a month for a single video.

So I decided to limit each image to 200-400 iterations, about the smallest number I could get away with.
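The arithmetic behind this decision, as a small sketch using the post's numbers (300 frames per video, 4 parts per frame). The assumption that every iteration costs the same, so that running time scales linearly with the iteration count, is mine and not a measurement.

```python
FRAMES_PER_VIDEO = 300  # from the post
TILES_PER_FRAME = 4     # each frame is split into 4 parts


def total_iterations(iters_per_image: int, videos: int = 1) -> int:
    """Total optimization iterations needed to stylize every tile."""
    images = FRAMES_PER_VIDEO * TILES_PER_FRAME * videos
    return images * iters_per_image


full = total_iterations(1000)  # 1,200,000 iterations for one video
for iters in (200, 400):
    reduced = total_iterations(iters)
    # Assumes every iteration costs the same, so time scales linearly.
    print(f"{iters} iters/image: {reduced:,} iterations, "
          f"{reduced / full:.0%} of the 1000-iteration time")
```

Under that linear assumption, and taking a month as 30 days, 200-400 iterations would take roughly 6 to 12 days per video instead of a month.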

Deep Learning is a new brush

I found that it sometimes produces unexpectedly beautiful results.

I especially like Rose in the style of Kiyoshi Yamashita; it’s breathtaking.

It would be great fun if we could use this technology more easily and casually.

Painting-style mash-ups may emerge, just as DJs mash up music every day!

In the Hokusai (北斎) style image, its treatment of features doesn’t match the treatment afforded by the Hokusai original. In the original you will notice that the features in the background are radically simplified compared to the middle ground and foreground. Have you considered using depth information as an input vector to your DLNN?


Hi Matt,

Actually, the Hokusai (北斎) style image was cropped to a smaller size because of memory limits.

As you mentioned, the treatment of features may not match well.

A better result would need more iterations and the full image as the style source.


Have you considered adding all the works (or more than one) of a specific painter and seeing the output? I’m curious whether the entire lifetime work of an artist has a huge impact on the output.

Very interesting stuff.


Hi Sam,

I tried several works by one painter and got very different outputs for each work, because this algorithm relies heavily on the patterns in a single image.

The algorithm can also merge multiple styles, so in principle it could learn from an artist’s entire lifetime of work.

I’m not sure what the output would look like, but that’s a very interesting idea.
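As a sketch of what merging styles can mean: neural-style accepts several style images together with a `-style_blend_weights` option, and one simple way to combine them, shown below, is a weighted average of their Gram matrices. This is an illustration under assumptions (random arrays in place of CNN activations; `blended_style_target` is a hypothetical name), not neural-style's actual implementation.

```python
import numpy as np


def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Gram matrix of a (channels, height, width) feature map."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T / (h * w)


def blended_style_target(style_features, weights=None) -> np.ndarray:
    """Weighted average of the Gram matrices of several style images.

    Every Gram matrix is (channels x channels) regardless of image size,
    so works of different sizes can be averaged directly.
    """
    if weights is None:
        weights = [1.0] * len(style_features)  # equal weights by default
    total = float(sum(weights))
    grams = [gram_matrix(f) for f in style_features]
    return sum((w / total) * g for w, g in zip(weights, grams))


# Random arrays stand in for CNN activations of two works of different sizes.
rng = np.random.default_rng(1)
works = [rng.standard_normal((8, 12, 12)), rng.standard_normal((8, 20, 16))]
target = blended_style_target(works, weights=[3, 1])
print(target.shape)  # (8, 8)
```

Because the blended target has the same shape as a single image's Gram matrix, the style loss is computed exactly as for one style image, which is what would let a whole body of work act as one style source.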


Very nice. Thank you for sharing!


Glad to hear that!


Amazing! Too bad you had to split the video, but it still looks great, I especially like the Munch-ified leaves.


I’ve seen similar works popping up on the internet, that is artworks created with deep learning. I wonder where this is headed… exciting times ahead, that’s for sure 🙂
